Dogs: Loglinear Model for Binary Data¶

An example from OpenBUGS [38], Lindley and Smith [47], and Kalbfleisch [45] concerning the Solomon-Wynne experiment on dogs. In the experiment, 30 dogs were subjected to 25 trials. On each trial, a barrier was raised, and an electric shock was administered 10 seconds later if the dog did not jump the barrier.

Model¶

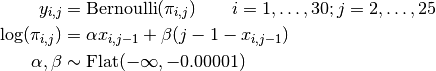

Failures to jump the barriers in time are modelled as

where  if dog

if dog  fails to jump the barrier before the shock on trial

fails to jump the barrier before the shock on trial  , and 0 otherwise;

, and 0 otherwise;  is the number of successful jumps prior to trial

is the number of successful jumps prior to trial  ; and

; and  is the probability of failure.

is the probability of failure.

Analysis Program¶

using Mamba

## Data

dogs = (Symbol => Any)[

:Y =>

[0 0 1 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 1 1 0 1 1 0 0 1 1 0 1 0 1 1 1 1 1 1 1 1

0 1 1 0 0 1 1 1 1 0 1 0 1 0 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 0 1 1 1 1 0 0 1 0 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 1 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 0 0 1 1 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 1 0 1 0 1 1 0 1 0 0 0 1 1 1 1 1 0 1 1 0

0 0 0 0 1 0 0 1 1 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 1 1 1 1 1 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 1 1 0 1 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 1 0 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 1 0 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 1 0 1 0 0 0 1 0 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 1 0 1 0 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1

0 1 0 0 0 0 1 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 1 1 0 1 0 1 1 0 1 0 1 1 1 1 1 1 1 1 1 1 1

0 0 0 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 1 0 1 0 1 1 1 1 1 1 1 1 1 1 0 0 1 1 1 1 1 1 1

0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 0 0 0 0 1 1 1 0 1 0 0 0 1 1 0 1 1 1 1 1 1

0 0 0 0 0 0 1 1 0 1 1 1 0 1 0 1 1 1 1 1 1 1 1 1 1

0 0 1 0 1 1 1 0 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 1 0 1 0 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 0 0 0 1 1 0 0 1 1 1 0 1 0 1 0 1 0 1 1 1 1 1 1 1

0 0 0 0 1 1 1 1 1 1 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1]

]

dogs[:Dogs] = size(dogs[:Y], 1)

dogs[:Trials] = size(dogs[:Y], 2)

dogs[:xa] = mapslices(cumsum, dogs[:Y], 2)

dogs[:xs] = mapslices(x -> [1:25] - x, dogs[:xa], 2)

dogs[:y] = 1 - dogs[:Y][:, 2:25]

## Model Specification

model = Model(

y = Stochastic(2,

@modelexpr(Dogs, Trials, alpha, xa, beta, xs,

Distribution[

begin

p = exp(alpha * xa[i,j] + beta * xs[i,j])

Bernoulli(p)

end

for i in 1:Dogs, j in 1:Trials-1

]

),

false

),

alpha = Stochastic(

:(Truncated(Flat(), -Inf, -1e-5))

),

A = Logical(

@modelexpr(alpha,

exp(alpha)

)

),

beta = Stochastic(

:(Truncated(Flat(), -Inf, -1e-5))

),

B = Logical(

@modelexpr(beta,

exp(beta)

)

)

)

## Initial Values

inits = [

[:y => dogs[:y], :alpha => -1, :beta => -1],

[:y => dogs[:y], :alpha => -2, :beta => -2]

]

## Sampling Scheme

scheme = [Slice([:alpha, :beta], [1.0, 1.0])]

setsamplers!(model, scheme)

## MCMC Simulations

sim = mcmc(model, dogs, inits, 10000, burnin=2500, thin=2, chains=2)

describe(sim)

Results¶

Iterations = 2502:10000

Thinning interval = 2

Chains = 1,2

Samples per chain = 3750

Empirical Posterior Estimates:

Mean SD Naive SE MCSE ESS

A 0.783585 0.018821132 0.00021732772 0.00034707278 2940.6978

B 0.924234 0.010903201 0.00012589932 0.00016800825 4211.5969

alpha -0.244165 0.024064384 0.00027787158 0.00044330447 2946.7635

beta -0.078860 0.011812880 0.00013640339 0.00018220980 4203.0847

Quantiles:

2.5% 25.0% 50.0% 75.0% 97.5%

A 0.7462511 0.7709223 0.7836328 0.7962491 0.8197714

B 0.9018854 0.9170128 0.9245159 0.9319588 0.9445650

alpha -0.2926932 -0.2601676 -0.2438148 -0.2278432 -0.1987298

beta -0.1032678 -0.0866338 -0.0784850 -0.0704666 -0.0570308